Rotten Tomatoes is the site so many people now use to judge movies. Even the studios use it, with TV spots bragging about the Rotten Tomatoes score a movie got. It has become a very important part of cinema, but should it be?

Rotten Tomatoes has serious problems as a platform. And no, I am not talking about that nonsense about the site being biased. Sorry, DC fans, but there is no plot to give DC movies bad reviews. Disney never paid off Rotten Tomatoes. One, Rotten Tomatoes doesn’t write reviews; they just aggregate them. Two, if Disney were paying off RT, don’t you think they would have done something about the negative fan reviews for The Last Jedi?

No, the problems with RT go much deeper. I will go through several issues I have with the site and explain why we should take the Tomatometer less seriously.

1. Their Reviews Are Not Weighted by Score

When RT creates its score, it only factors in one thing: is the review positive or negative? It is a very simple formula, too simple. A “Fresh” review covers a huge range. A critic can think a movie is just okay and still give it a “Fresh” review. A review that rates a movie 6/10 counts exactly the same as one that rates it 10/10. In the eyes of the Tomatometer, there is no difference between a perfect movie and an average one.
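To make the difference concrete, here is a minimal Python sketch of the two numbers RT shows, assuming the Tomatometer is simply the percentage of reviews marked Fresh and the average rating is a plain mean on a 10-point scale. The movies, scores, and function names are hypothetical, for illustration only.

```python
def tomatometer(fresh_flags):
    """Percentage of reviews marked Fresh (True) out of all reviews."""
    return 100 * sum(fresh_flags) / len(fresh_flags)


def average_rating(scores):
    """Plain mean of the critics' scores (out of 10)."""
    return sum(scores) / len(scores)


# Hypothetical "just okay" movie: ten critics each give 6/10 and mark it Fresh.
okay_scores = [6] * 10
okay_fresh = [True] * 10

# Hypothetical masterpiece: ten critics each give 10/10 and mark it Fresh.
great_scores = [10] * 10
great_fresh = [True] * 10

print(tomatometer(okay_fresh), average_rating(okay_scores))    # 100.0 6.0
print(tomatometer(great_fresh), average_rating(great_scores))  # 100.0 10.0
```

Both hypothetical movies get a perfect 100% on the pass/fail count; only the average rating tells them apart.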

Now you might be saying, “They do count actual ratings; they put the average score out of 10 under the Tomatometer.” But that is the problem: it is separate, and in much smaller print. If you look for it, you can find it, but the thing everyone’s eye is drawn to is the Tomatometer, and for many people, that is all they look at.

There has always been a problem with movie ratings overshadowing context. People do not read the reviews; they just scroll to the bottom to see the rating. I have been guilty of doing it with game reviews on IGN.com. But now it is even worse. Many people don’t look at the reviews, or even the ratings; they look at the Tomatometer score and judge the movie on that alone.

The reason this is a problem is that it rewards safe, often mediocre movies. The movies with the highest scores aren’t necessarily the best movies, but the most accessible ones. Safe crowd-pleasers get more acclaim than artistic movies that take risks. Pixar and Marvel movies benefit from this: most of them land in the 80 to 100 percent range on the Tomatometer, even though most of their average ratings sit in the 7 to 8 out of 10 range.

It is a lazy way of aggregating movie reviews. Metacritic, on the other hand, does weight their score. Just by adding one more category, their scores become much more accurate. Metacritic has three color-coded categories: green is favorable, yellow is mixed, and red is negative. But they also take the actual rating into account, converting each review to a score out of 100, so there are many more layers of nuance in the final score. They even publish a conversion chart showing how different ratings factor into the score.
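A rough sketch of the idea in Python: convert every review to a 0 to 100 scale, then take an average. This is a simplified assumption, not Metacritic’s actual formula. Metacritic does not publish how much weight each critic gets, so the sketch uses equal weights unless told otherwise, and the example reviews and function names are hypothetical.

```python
def to_100(score, scale_max):
    """Convert a rating such as 3/4 or 8/10 to a 0-100 scale."""
    return 100 * score / scale_max


def weighted_score(reviews, weights=None):
    """Weighted mean of (score, scale_max) reviews on a 0-100 scale.

    Equal weights are used unless a list of weights is given
    (the real per-critic weights are not public).
    """
    if weights is None:
        weights = [1.0] * len(reviews)
    normalized = [to_100(score, scale_max) for score, scale_max in reviews]
    total = sum(w * s for w, s in zip(weights, normalized))
    return total / sum(weights)


# Hypothetical reviews: 3/4 stars, 8/10, and 4/5 stars.
reviews = [(3, 4), (8, 10), (4, 5)]
print(round(weighted_score(reviews), 1))  # 78.3
```

Here a 6/10 and a 10/10 pull the final number in different directions instead of counting as the same “pass.”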

Is it a perfect system? No, but it is far superior to the Rotten Tomatoes formula. The Rotten Tomatoes rating system is like taking a pass/fail course in college: as long as you show up and do not fall asleep, you are probably going to pass.

2. What Counts as “Fresh” or “Rotten” Seems Arbitrary

Okay, so they don’t factor in the ratings, only whether a review is Fresh or Rotten. Let’s accept that for now. But if that is going to be the case, you would think they would at least be consistent about what is Fresh and what is Rotten. That just is not the case.

Here is a review for Black Panther, deemed “Fresh.” But the critic rated it 2/5, and the words he used to describe it imply that he did not like it very much. In what world is a 2 out of 5 a positive review? Then there is something like this.

Now, I am no mathematician, but I am pretty sure 2.5/4 is more than 2/5. One is 62.5%, which by the Tomatometer’s own 60% standard would be “Fresh.” The other is 40%. I did some digging and found out that Rotten Tomatoes lets the critic decide whether a review is “Fresh” or “Rotten.” On paper, that sounds like a fine idea, but it makes the whole system inconsistent. There need to be standards for what is “Fresh” and what is “Rotten.” Without standards, the Tomatometer means nothing. It already has so little nuance, and this laziness in deciding what is positive or negative makes it even less meaningful.
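A consistent standard could be as simple as converting every rating to a percentage and applying one fixed cutoff. Below is a minimal Python sketch assuming a hypothetical per-review cutoff of 60%, borrowed from the line the Tomatometer uses to call a whole movie Fresh; Rotten Tomatoes does not apply this rule to individual reviews, and the function name is made up for illustration.

```python
FRESH_CUTOFF = 60.0  # hypothetical per-review cutoff, in percent


def verdict(score, scale_max, cutoff=FRESH_CUTOFF):
    """Label a single review Fresh or Rotten from its rating alone."""
    percent = 100 * score / scale_max
    return "Fresh" if percent >= cutoff else "Rotten"


print(verdict(2.5, 4))  # Fresh  (62.5%)
print(verdict(2, 5))    # Rotten (40.0%)
```

With a rule like this, the 2/5 Black Panther review would be Rotten no matter how the critic labeled it.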

3. Fan Reviews Are Susceptible to Spamming

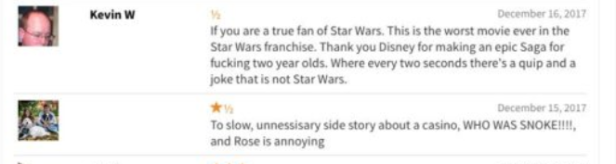

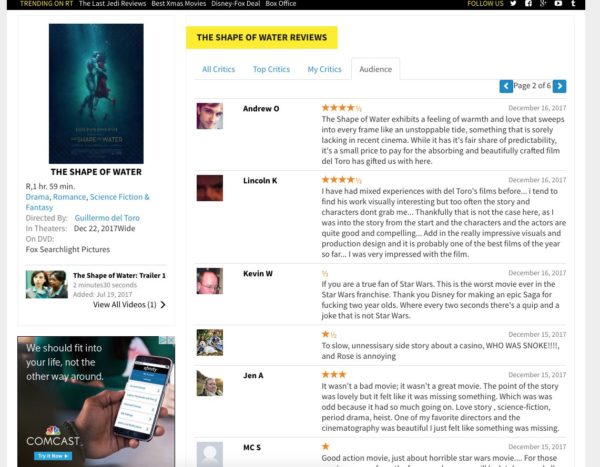

Having a way to measure what an audience though vs what critics thought is important. We see the discrepancy between fans and critics opinions all the time. And I do not think we should just write off all fans as mindless. But the way RT handles these fan reviews is a problem. Take a look at these fan reviews.

These people clearly did not like The Last Jedi, and they are of course entitled to their opinion. But there is one problem.

These reviews of The Last Jedi were posted on the page for The Shape of Water. And this is just the tip of the iceberg: negative reviews of The Last Jedi showed up on many other pages. The Force Awakens received many new reviews at the time that were clearly about The Last Jedi. Also, many of the fans posting these negative reviews have no account. Seriously, go to The Last Jedi’s audience reviews and start clicking on the accounts that gave negative reviews. You will find a few interesting things. Many of them have only one review, and it is for The Last Jedi. Even more have no account at all, so an account was created just to post a negative review and then deleted from existence.

Now, is it possible that The Last Jedi was so bad it made fans lose their minds, post negative reviews on the wrong pages, create accounts for the sole purpose of lambasting the movie, and then delete those accounts? Sure, but it seems more likely that some of them are bots. Rotten Tomatoes has denied this multiple times, but we are talking about the company too lazy to weight their scores; I don’t trust them to actually investigate phony accounts. When the pages of several other movies are being spammed with reviews of The Last Jedi, there is clearly a problem.

But God forbid Rotten Tomatoes put any effort into monitoring their website. The solution is simple; websites have been developing methods to stop spammers for a long time. For one, make people confirm their accounts. It won’t stop all spammers, but that one extra step will stop some. There are ways to ensure that profiles have real people attached to them without violating privacy.

4. Too Much Influence

And the culmination of all of this is the biggest problem. Despite these issues, Rotten Tomatoes has a ton of power. They are the review aggregation site. The Tomatometer has become the benchmark for whether movies are good or not.

And this can hurt movies. There is actual evidence of bad Tomatometer scores hurting movies in the second Black Panther; they were put on blast, and it should not be like that.

If RT is going to wield this much power, they need to do so wisely. But they do not. They take the laziest approach to compiling reviews.